When optimizing models in deep learning, “RuntimeError: grad can be implicitly created only for scalar outputs” is a common source of confusion. This error, sometimes quoted as “grad can only be implicitly created for scalar outputs,” sheds light on how automatic differentiation works in frameworks like PyTorch.

Contents

- 1 What is RuntimeError: grad can be implicitly created only for scalar outputs?

- 2 Why does RuntimeError: grad can be implicitly created only for scalar outputs occur?

- 3 How do you resolve RuntimeError: grad can be implicitly created only for scalar outputs?

- 4 Using gradient

- 5 FAQs

- 6 Conclusion

- 7 References

What is RuntimeError: grad can be implicitly created only for scalar outputs?

The error message “RuntimeError: grad can be implicitly created only for scalar outputs” is raised by the automatic differentiation engine of PyTorch when backward() is called, without arguments, on a tensor that is not a scalar.

A common case arises when gradients are computed for a non-scalar output (a tensor with multiple elements) in a call that expects a scalar output. Automatic differentiation frameworks are geared toward differentiating a single scalar value, typically a loss, with respect to the model parameters.

The following code sample shows where this error can be encountered.

import torch

x = torch.tensor([5., 5.], requires_grad=True)

y = torch.tensor([8., 8.], requires_grad=True)

A=x*y

#calculate the gradient

A.backward()

print(x.grad)

print(y.grad)It will give the following output:

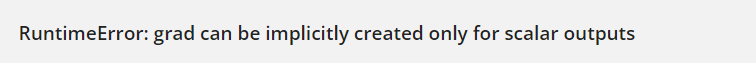

loss.backward() # Raises RuntimeError: grad can be implicitly created only for scalar outputs

In this example, the tensor A is non-scalar (a vector with two elements). PyTorch raises the error because, without further instruction, it cannot implicitly decide how the elements of A should be weighted when the gradients are calculated.
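To make the failure easy to reproduce, the sketch below wraps the same computation in a try/except block and stores the message. The variable name `error_message` is an illustrative choice and not part of any PyTorch API.

```python
import torch

x = torch.tensor([5., 5.], requires_grad=True)
y = torch.tensor([8., 8.], requires_grad=True)
A = x * y  # shape (2,), so A is not a scalar

try:
    # No gradient argument is given and A has two elements.
    A.backward()
    error_message = None
except RuntimeError as err:
    error_message = str(err)

print(A.shape)
print(error_message)
print(x.grad)  # still None, because no backward pass was run
```

Because the error is raised before any backward pass happens, neither `x.grad` nor `y.grad` is populated.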

Why does RuntimeError: grad can be implicitly created only for scalar outputs occur?

The “RuntimeError: grad can be implicitly created only for scalar outputs” error can be traced to several closely related causes.

Non-Scalar Output

The first reason is calling backward() on a non-scalar output tensor. When the output is a vector or any tensor with more than one element, its derivative with respect to the inputs is a full Jacobian matrix rather than a single gradient, and the framework does not choose one gradient out of it implicitly.

Ambiguity in Chain Rule

Another source of the error is ambiguity in the chain rule of calculus, which forms the basis of backpropagation. The chain rule multiplies local gradients along the computation graph, starting from the output.

With a non-scalar output, the starting point is unclear: each element of the output tensor has its own gradient with respect to the inputs, and nothing says how these should be combined. The error arises because the automatic differentiation scheme cannot resolve this ambiguity on its own.
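The ambiguity can be written out as a short derivation. The symbols $f$, $J$, $v$, $n$ and $m$ are notation introduced here for illustration, and the last line applies it to the earlier example $A = x \odot y$ with $x = (5, 5)$ and $y = (8, 8)$.

```latex
% Output y = f(x) with x in R^n and y in R^m; J is the m-by-n Jacobian.
\[
  J_{ij} = \frac{\partial y_i}{\partial x_j}, \qquad
  \text{backward pass computes } v^{\top} J \text{ for a chosen } v \in \mathbb{R}^{m}.
\]
% Scalar output (m = 1): v = 1 is the only natural choice.
\[
  m = 1: \quad v = 1, \qquad v^{\top} J = \nabla_x y .
\]
% Non-scalar output (m > 1): v must be supplied, for example v = (1, 1).
\[
  A = x \odot y: \quad \frac{\partial A}{\partial x} = \operatorname{diag}(y), \qquad
  v^{\top} \operatorname{diag}(y) = (1, 1)\operatorname{diag}(8, 8) = (8, 8).
\]
```

For $m > 1$ there is no canonical $v$, which is why the caller has to provide one or reduce the output to a scalar first.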

Implicit Gradient Creation

One important concept in automatic differentiation frameworks like PyTorch is implicit gradient creation. For a scalar output, backward() implicitly starts from a gradient of 1. For a non-scalar output, the framework has no unambiguous way to determine that starting gradient, and the runtime error is raised because no explicit gradient was supplied.
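As a minimal sketch of what "implicit" means here, the snippet below runs the same scalar computation twice: once with a bare `backward()` and once with the starting gradient written out as `torch.tensor(1.0)`. The function `(x ** 2).sum()` is an arbitrary example chosen for illustration.

```python
import torch

x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

# Scalar output: backward() needs no argument.
loss = (x ** 2).sum()
loss.backward()
implicit_grad = x.grad.clone()

# Same computation, with the starting gradient passed explicitly.
x.grad = None  # reset so the two runs do not accumulate
loss = (x ** 2).sum()
loss.backward(gradient=torch.tensor(1.0))
explicit_grad = x.grad.clone()

print(implicit_grad)
print(explicit_grad)
```

Both runs should yield the same gradient, 2 * x, which suggests that the implicit case is simply the explicit one with a starting value of 1.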

Incompatibility with Backpropagation Principles

The error also reflects a mismatch with how backpropagation is used in training. Parameter updates follow the gradient of a single scalar objective, typically the loss. Without a scalar output, there is no single direction in which to improve the model parameters, which contradicts the basis of the backpropagation algorithm.

Missing Reduction Operations

A frequently overlooked cause is a missing reduction operation such as sum() or mean() that consolidates the non-scalar output into a single scalar. These reductions matter because they produce the one value with respect to which a unique gradient is defined. Without them, the framework cannot create gradients for non-scalar outputs automatically.
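One place where a reduction can go missing in practice is a loss function configured to return one value per element. The sketch below assumes `torch.nn.functional.mse_loss` with `reduction="none"`; the tensors `pred` and `target` are made-up values for illustration.

```python
import torch
import torch.nn.functional as F

pred = torch.tensor([0.5, 1.5, 2.0], requires_grad=True)
target = torch.tensor([1.0, 1.0, 1.0])

# reduction="none" keeps one loss value per element, so this is a vector.
per_element = F.mse_loss(pred, target, reduction="none")
print(per_element.shape)

# Calling per_element.backward() here would raise the RuntimeError.
# Reducing to a single number first makes backward() well defined.
loss = per_element.mean()
loss.backward()
print(pred.grad)
```

The gradient printed at the end is 2 * (pred - target) / 3, since the mean divides each squared error by the number of elements.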

How do you resolve RuntimeError: grad can be implicitly created only for scalar outputs?

The solutions to RuntimeError: grad can be implicitly created only for scalar outputs are described below.

Aggregating a non-scalar output to a scalar value

Resolving the “RuntimeError: grad can be implicitly created only for scalar outputs” error means making sure that backward() is called on a value the automatic differentiation framework can handle.

One common strategy is to aggregate the non-scalar output into a scalar value using a reduction operation such as sum() or mean(). The code below uses that method.

Example:

import torch

x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

y = x * 2

loss = y.sum()

loss.backward()

Using gradient

Another way to resolve the error is to pass the gradient argument to backward(). This argument is a tensor of the same shape as the output that supplies a weight for each element, so PyTorch no longer has to create the gradient implicitly. Below is an example:

import torch

x = torch.tensor([5., 5.], requires_grad=True)

y = torch.tensor([8., 8.], requires_grad=True)

A = x * y

# Calculate the gradient

A.backward(gradient=torch.tensor([1., 1.]))

# Print the gradients

print(x.grad)

print(y.grad)

FAQs

Why must PyTorch have scalar outputs during gradient computation?

PyTorch, like most deep learning frameworks, uses automatic differentiation for backpropagation. With a scalar output, the starting gradient is unambiguous (it is 1), so the framework can propagate gradients back through every step of the computation. With a non-scalar output, that starting gradient has to be supplied explicitly.
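A small experiment can make this concrete. The sketch below compares reducing with `sum()` against passing explicit weights through the `gradient` argument; the helper names `grads_via_sum` and `grads_via_weights` are hypothetical and exist only for this illustration.

```python
import torch

def make_inputs():
    # Fresh leaf tensors for every run so gradients do not accumulate.
    x = torch.tensor([5., 5.], requires_grad=True)
    y = torch.tensor([8., 8.], requires_grad=True)
    return x, y

def grads_via_sum():
    x, y = make_inputs()
    (x * y).sum().backward()
    return x.grad, y.grad

def grads_via_weights(weights):
    x, y = make_inputs()
    (x * y).backward(gradient=weights)
    return x.grad, y.grad

sum_grads = grads_via_sum()
ones_grads = grads_via_weights(torch.ones(2))
skewed_grads = grads_via_weights(torch.tensor([1., 0.]))

print(sum_grads)
print(ones_grads)
print(skewed_grads)
```

Weights of all ones should reproduce the `sum()` result, while a different weight vector gives a different answer. That difference is the choice PyTorch declines to make implicitly.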

Besides sum(), can I use other reduction operations to solve the error?

Yes. Besides sum(), you can use mean() or any other operation that reduces a tensor to a single scalar value. The key is that the value passed to backward() is a single number.
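Different reductions remove the error equally well but do not produce the same gradients. The sketch below compares `torch.sum`, `torch.mean` and `torch.linalg.norm` on the same input; the helper `grad_after` is a hypothetical name used only here.

```python
import torch

def grad_after(reduce):
    # Rebuild the graph each time so gradients do not accumulate.
    x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
    y = x * 2
    reduce(y).backward()  # reduce(y) is a scalar, so no argument is needed
    return x.grad

grad_sum = grad_after(torch.sum)
grad_mean = grad_after(torch.mean)
grad_norm = grad_after(torch.linalg.norm)

print(grad_sum)
print(grad_mean)
print(grad_norm)
```

With `sum()` every element contributes a gradient of 2, with `mean()` that value is divided by the number of elements, and with the norm it depends on the values themselves. Which reduction is appropriate depends on what the scalar is meant to represent.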

Conclusion

In deep learning, errors are an integral part of model development. The error “RuntimeError: grad can be implicitly created only for scalar outputs” shows why it is important to match operations to what automatic differentiation frameworks like PyTorch expect. By reducing non-scalar outputs to scalars, or by passing an explicit gradient argument to backward(), programmers can avoid this error and continue building deep learning models more effectively.