In this article, we explain what the ConvergenceWarning: lbfgs failed to converge (status=1) means, what causes it, and how to resolve it. This is a common warning you may encounter while training machine learning models in scikit-learn.

Let's look at the causes of the ConvergenceWarning: lbfgs failed to converge (status=1) and the ways to fix it.


What is the ConvergenceWarning: lbfgs failed to converge (status=1) warning about?

This warning indicates a problem with model optimization performed by an algorithm called lbfgs. The name lbfgs stands for Limited-memory Broyden-Fletcher-Goldfarb-Shanno.

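lbfgs is the default solver of scikit-learn's LogisticRegression. It is a quasi-Newton method: instead of storing the full matrix of second derivatives, it approximates the curvature of the loss from a limited history of recent gradients. This keeps memory use low and usually converges quickly on small and medium-sized datasets. The solver runs for at most max_iter iterations; if it has not met its stopping criterion by then, scikit-learn raises this warning.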
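You can confirm which solver and iteration limit LogisticRegression uses by inspecting its parameters. This minimal sketch assumes scikit-learn 0.22 or later, where lbfgs is the default solver; older releases defaulted to liblinear.

```python
from sklearn.linear_model import LogisticRegression

# get_params() returns the constructor arguments, including their defaults.
params = LogisticRegression().get_params()

print(params["solver"])    # lbfgs: the optimization algorithm
print(params["max_iter"])  # 100: maximum number of solver iterations
print(params["tol"])       # 0.0001: tolerance used by the stopping criterion
```

The max_iter value shown here is the limit that, once reached, produces status=1 in the warning.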

How is the ConvergenceWarning: lbfgs failed to converge (status=1) warning generated?

scikit-learn raises the ConvergenceWarning: lbfgs failed to converge (status=1) warning when the lbfgs solver fails to converge. Several factors can cause this. Let's take a look at them.

- Inadequate preprocessing, especially features that are not scaled to comparable ranges.

- Too little regularization, which can make the optimization problem unstable.

- Parameters that need tuning, such as the iteration limit (max_iter) or the stopping tolerance (tol).

- Overfitting on high-variance or noisy data.

- A poorly chosen machine learning model for the data.

- Bugs in the code or problems in the data itself.
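Unscaled features are among the most common causes. The sketch below uses scikit-learn's built-in breast cancer dataset (an assumption chosen for illustration; datasets whose features have very different ranges often behave similarly) and counts the ConvergenceWarnings raised when fitting LogisticRegression with and without StandardScaler. The helper fit_and_collect is a hypothetical name used only for this example.

```python
import warnings

from sklearn.datasets import load_breast_cancer
from sklearn.exceptions import ConvergenceWarning
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)


def fit_and_collect(model):
    """Fit the model and return the ConvergenceWarnings raised during fit (hypothetical helper)."""
    with warnings.catch_warnings(record=True) as caught:
        warnings.simplefilter("always", ConvergenceWarning)
        model.fit(X, y)
    return [w for w in caught if issubclass(w.category, ConvergenceWarning)]


# Raw features: some are below 1, others above 1000, which slows lbfgs down.
unscaled_warnings = fit_and_collect(LogisticRegression())

# Standardize every feature to zero mean and unit variance before fitting.
scaled_warnings = fit_and_collect(make_pipeline(StandardScaler(), LogisticRegression()))

print(len(unscaled_warnings), len(scaled_warnings))
```

With standardized features, lbfgs typically converges within the default 100 iterations, so scaling is worth trying before raising max_iter.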

If your model matches any of the conditions above, it is likely to show this warning. Any instability or problem in the optimization can prevent convergence. Let's look at an example.

from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

We import load_iris, a scikit-learn function that loads the Iris dataset, and the LogisticRegression class from scikit-learn. Next, we load the data, fit a logistic regression model, predict the classes of the first five samples, and print the accuracy on the training data.

X, y = load_iris(return_X_y=True)
data = LogisticRegression(random_state=1).fit(X, y)
print(data.predict(X[:5, :]))
print(data.score(X, y))

We get the expected output, but this example also raises a warning.

[0 0 0 0 0]

0.9733333333333334

/usr/local/lib/python3.10/dist-packages/sklearn/linear_model/_logistic.py:458: ConvergenceWarning: lbfgs failed to converge (status=1):
STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Why was this warning generated? The lbfgs solver in scikit-learn reached its iteration limit before it converged. At each iteration, lbfgs evaluates the loss and its gradient and uses them to choose a better set of coefficients. Convergence can fail for the following reasons:

- The loss is still decreasing too slowly to satisfy the stopping tolerance when the solver stops.

- lbfgs runs for at most a fixed number of iterations, set by max_iter (100 by default in LogisticRegression).

- After fitting, scikit-learn checks the status reported by the optimizer. It does not fix the problem; if the status is non-zero, as with status=1 (iteration limit reached), it raises the warning.

- The fitted model is still returned, but with the non-optimal coefficients reached at the last iteration.

The same factors can cause convergence warnings in other iteratively fitted estimators, such as LinearSVC.
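scikit-learn's lbfgs solver relies on SciPy's L-BFGS-B implementation (scipy.optimize.minimize with method="L-BFGS-B"), and the status number in the warning is the status field of SciPy's result: 0 means the optimizer converged and 1 means it hit its iteration or function-evaluation limit. This sketch reproduces both outcomes on SciPy's Rosenbrock test function, chosen here purely for illustration.

```python
import numpy as np
from scipy.optimize import minimize, rosen, rosen_der

# A common starting point for the Rosenbrock function, whose minimum is at (1, 1).
x0 = np.array([-1.2, 1.0])

# Allow only 2 iterations: the solver stops before converging.
short = minimize(rosen, x0, jac=rosen_der, method="L-BFGS-B", options={"maxiter": 2})

# Use SciPy's default iteration limit: the solver can meet its stopping criterion.
full = minimize(rosen, x0, jac=rosen_der, method="L-BFGS-B")

print(short.status, short.message)  # status 1: iteration limit reached
print(full.status, full.x)          # status 0: converged near (1, 1)
```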

How do you resolve the ConvergenceWarning: lbfgs failed to converge (status=1) warning?

In many cases, you can resolve this warning simply by increasing the maximum number of iterations so the solver has enough room to converge. LogisticRegression accepts a max_iter keyword argument for this, which defaults to 100. Scaling the features, for example with StandardScaler, is the other common fix and often reduces the number of iterations needed.
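After fitting, the n_iter_ attribute reports how many iterations lbfgs actually ran, so you can check for convergence in code instead of watching for the warning. This sketch assumes the Iris data used in this article and an iteration limit of 1000. A value of n_iter_ below max_iter usually means the solver stopped because it met its stopping criterion rather than because it ran out of iterations.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)

# Raise the limit from the default of 100 to give lbfgs more room.
model = LogisticRegression(random_state=1, max_iter=1000).fit(X, y)

# n_iter_ is an array holding the number of iterations the solver ran.
iterations = int(model.n_iter_.max())
print(iterations)
print(iterations < model.max_iter)  # True when the solver stopped before the limit
```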

LogisticRegression

The most common place to see this warning is LogisticRegression, whose default solver is lbfgs. Consider the following code:

from sklearn.datasets import load_iris

from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)

data = LogisticRegression(random_state=1).fit(X, y)

print(data.predict(X[:5, :]))

data.predict_proba(X[:5, :])

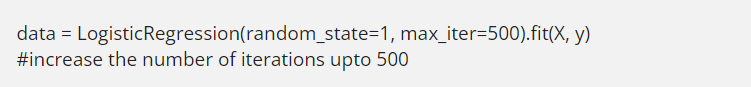

print(data.score(X, y))In this code, take a look at the 4th line, this is the line from which you are getting the ConvergenceWarning: lbfgs failed to converge (status=1) warning, so replace this line with the following line:

data = LogisticRegression(random_state=1, max_iter=500).fit(X, y)  # raise the iteration limit to 500

With this change, the code runs without the warning.
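To make sure the fix worked, you can turn ConvergenceWarning into an exception while fitting, so that a model that still fails to converge cannot slip through silently. This is a minimal sketch using Python's standard warnings module; the converged flag is a hypothetical name used only for this example.

```python
import warnings

from sklearn.datasets import load_iris
from sklearn.exceptions import ConvergenceWarning
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)

with warnings.catch_warnings():
    # Inside this block, any ConvergenceWarning is raised as an exception.
    warnings.simplefilter("error", ConvergenceWarning)
    try:
        data = LogisticRegression(random_state=1, max_iter=500).fit(X, y)
        converged = True
    except ConvergenceWarning as exc:
        print(f"Still not converged: {exc}")
        converged = False

print(converged)
```

The filter is restored when the with block ends, so other code in your program keeps the default warning behavior.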

LinearSVC

Apart from logistic regression, a similar convergence warning can appear with LinearSVC (Linear Support Vector Classification). LinearSVC does not use lbfgs; it is built on the liblinear library, so its message reads "Liblinear failed to converge, increase the number of iterations." instead. First, import the required modules from sklearn.

from sklearn.svm import LinearSVC
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.datasets import make_classification

Next, generate a sample dataset and build a pipeline that standardizes the features before fitting LinearSVC. Setting dual=False makes LinearSVC solve the primal optimization problem instead of the dual one, which the scikit-learn documentation recommends when there are more samples than features. We did not set max_iter, because LinearSVC already allows 1000 iterations by default.

X, y = make_classification(n_features=5, random_state=1)
data = make_pipeline(
    StandardScaler(), LinearSVC(random_state=1, tol=1e-6, dual=False)
)
data.fit(X, y)
print(data)

This prints the pipeline:

Pipeline(steps=[('standardscaler', StandardScaler()),
                ('linearsvc',
                 LinearSVC(dual=False, random_state=1, tol=1e-06))])

FAQs

Can the limit for iterations in lbfgs be increased?

Yes. Pass a larger value to the max_iter keyword argument, for example LogisticRegression(max_iter=1000). The solver is then allowed to run more iterations, which gives it more room to converge.

Why is there a need to correct this warning?

If you ignore the warning, the model keeps the coefficients from the last iteration, which are not the optimal solution. Because the optimization is incomplete, the model may predict less accurately than it could.

Conclusion

To conclude, the ConvergenceWarning: lbfgs failed to converge (status=1) can usually be fixed with the simple steps described above, such as scaling the features or increasing max_iter. Understanding why the warning is generated helps you resolve it and related convergence issues.


To learn more about fixes for common mistakes when writing in Python, follow us at Python Clear.