Have you ever been stumped by the “RuntimeError: element 0 of tensors does not require grad and does not have a grad_fn” error while working with PyTorch? If so, you’re not alone. This article walks through what the error means, its root causes, and, most importantly, how to resolve it.

What Causes the “RuntimeError: Element 0 of Tensors Does Not Require Grad and Does Not Have a Grad_fn”?

Before we jump into the solution, let’s understand why this error occurs. At its core, it comes from autograd, PyTorch’s automatic differentiation engine. Deep learning models use backpropagation to compute gradients of the loss function with respect to the model’s parameters. An optimizer such as stochastic gradient descent (SGD) then uses those gradients to update the parameters.

Syntax:-

# Automatic differentiation and gradient computation

import torch

# Define a tensor with requires_grad set to True

tensor_with_grad = torch.tensor([2.0, 3.0, 4.0], requires_grad=True)

# Perform some operations

result = tensor_with_grad * 5

# Check if a gradient function (grad_fn) exists

print("Does it have a grad_fn?", result.grad_fn is not None)

However, not all tensors in a deep learning model need gradients. Tensors such as input data or fixed parameters don’t require gradients because you don’t intend to update them during training. PyTorch records the operations it has to differentiate in a computational graph, and it raises this “RuntimeError” when you call backward() on a tensor that is not part of any graph: the tensor does not require gradients itself, and it was not produced by an operation on a tensor that does.

Syntax:-

import torch

# Create tensors that do not require gradients

input_data = torch.tensor([1.0, 2.0, 3.0], requires_grad=False)

fixed_parameters = torch.tensor([0.1, 0.2, 0.3], requires_grad=False)
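
Creating these tensors is harmless on its own; the error only appears once backward() is called on something computed from them. A minimal sketch that reproduces it, where the names ‘loss’ and ‘message’ are illustrative:

```python
import torch

# Neither tensor requires gradients, so autograd records nothing
input_data = torch.tensor([1.0, 2.0, 3.0], requires_grad=False)
fixed_parameters = torch.tensor([0.1, 0.2, 0.3], requires_grad=False)

loss = (input_data * fixed_parameters).sum()

try:
    loss.backward()  # there is no graph to walk back through
    message = None
except RuntimeError as err:
    message = str(err)

print(message)
```

The multiplication and the sum both succeed; only the backward() call fails.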

Let’s break this down further:

Element 0 of Tensors

The message mentions “element 0,” but this does not refer to the first value stored inside a tensor. backward() can be given one or more tensors to differentiate, and the number is the position of the offending tensor in that list. Because loss.backward() passes a single tensor, you almost always see “element 0”, which tells you that the tensor you called backward() on is the problem.
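
To see where the number comes from, the sketch below passes two tensors to torch.autograd.backward() and makes only the second one invalid. The variable names are illustrative, and the exact wording of the message may differ between PyTorch versions:

```python
import torch

w = torch.tensor([1.0, 2.0], requires_grad=True)
tracked = (w * 2).sum()        # has a grad_fn
untracked = torch.tensor(3.0)  # no requires_grad, no grad_fn

try:
    # The second tensor in the list (index 1) is the one autograd rejects
    torch.autograd.backward([tracked, untracked])
    message = None
except RuntimeError as err:
    message = str(err)

print(message)
```

In recent PyTorch releases the message names element 1 here rather than element 0.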

Does Not Require Grad

This part indicates that the tensor in question was not set to require gradients. In PyTorch, you control whether a tensor needs gradients through its ‘requires_grad’ attribute. Tensors you create directly, for example with torch.tensor(), have this attribute set to ‘False’ by default, while the parameters of nn.Module layers have it set to ‘True’.
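
A short check of those defaults, assuming a small nn.Linear layer purely for illustration:

```python
import torch
from torch import nn

plain = torch.tensor([1.0, 2.0, 3.0])  # created directly
layer = nn.Linear(3, 1)                # its weight and bias are nn.Parameter objects

print(plain.requires_grad)         # False
print(layer.weight.requires_grad)  # True
```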

Does Not Have a Grad_fn

In PyTorch’s computational graph, each operation on a tensor that requires gradients creates a new node with a corresponding gradient function, and the result keeps a reference to it in its grad_fn attribute. This function is responsible for computing gradients during backpropagation. If none of the inputs to an operation require gradients, the result gets no grad_fn, so backward() has nothing to traverse.
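
The difference is easy to inspect. In this sketch the names ‘tracked’ and ‘untracked’ are illustrative:

```python
import torch

a = torch.tensor([2.0, 3.0], requires_grad=True)
b = torch.tensor([2.0, 3.0])  # requires_grad defaults to False

tracked = a * 5    # produced from a tensor that requires gradients
untracked = b * 5  # no input requires gradients

print(a.grad_fn)          # None: a leaf tensor created by the user
print(tracked.grad_fn)    # a multiplication backward node
print(untracked.grad_fn)  # None: the operation was not recorded
```

Note that ‘a’ has no grad_fn either, but backward() still works through it because it is a leaf tensor with ‘requires_grad’ set to ‘True’.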

How to Resolve the “RuntimeError: Element 0 of Tensors Does Not Require Grad and Does Not Have a Grad_fn”?

Now that we have a clear understanding of the error, let’s explore how to resolve it. To fix this issue, you must ensure that the tensor causing the error is correctly configured for gradient computation. Here’s a step-by-step guide:

Check the Problematic Tensor

Start by identifying the tensor that’s causing the error. The traceback points at the backward() call, so the tensor to inspect is the one you called backward() on, which is usually the loss.

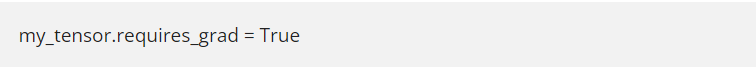
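
Printing the autograd status of the suspect tensors just before the backward() call usually shows where the graph was lost. A minimal sketch, where ‘describe’ is a hypothetical helper and ‘prediction’ stands in for a model output:

```python
import torch

def describe(name, tensor):
    """Hypothetical helper: summarize a tensor's autograd status."""
    return f"{name}: requires_grad={tensor.requires_grad}, grad_fn={tensor.grad_fn}"

prediction = torch.tensor([0.5, 1.5])  # stands in for a model output
target = torch.tensor([1.0, 1.0])
loss = ((prediction - target) ** 2).mean()

print(describe("prediction", prediction))
print(describe("loss", loss))
```

If the loss reports no grad_fn, work backwards through the tensors it was computed from until you find the first one that should have been tracked.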

Set requires_grad to True

If the tensor should participate in gradient computation, set its ‘requires_grad’ attribute to ‘True’. For example, if you have a tensor named ‘my_tensor’, you can pass requires_grad=True when creating it or call my_tensor.requires_grad_(True) afterwards.
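
A minimal sketch of this step, using the in-place method requires_grad_() on the ‘my_tensor’ example:

```python
import torch

my_tensor = torch.tensor([1.0, 2.0, 3.0])  # requires_grad is False here
my_tensor.requires_grad_(True)             # in-place switch on a leaf tensor

loss = (my_tensor ** 2).sum()
loss.backward()

print(my_tensor.grad)  # tensor([2., 4., 6.])
```

The flag has to be set before the operations you want tracked; enabling it afterwards does not retroactively add earlier operations to the graph.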

Verify Operations

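Ensure that every operation between your parameters and the loss is differentiable and stays inside PyTorch. Operations such as argmax, .item(), .detach(), or a round trip through NumPy cut the result off from the graph. If you’re using custom operations or non-differentiable functions, you might need to make adjustments or use alternatives.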
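
The sketch below contrasts a few operations that drop out of the graph with one that stays in it. The tensor ‘logits’ and the other names are made up for illustration:

```python
import torch

logits = torch.tensor([0.2, 1.5, -0.3], requires_grad=True)

hard = logits.argmax()                     # integer index: not differentiable
soft = torch.softmax(logits, dim=0)        # differentiable alternative
rebuilt = torch.tensor(soft.sum().item())  # .item() returns a plain Python float

print(hard.requires_grad)     # False
print(soft.requires_grad)     # True
print(rebuilt.requires_grad)  # False
```

A loss built from ‘hard’ or ‘rebuilt’ would trigger the error on backward(), while one built from ‘soft’ would not.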

Check Input Data

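If the problematic tensor is an input data tensor, it normally should not require gradients, so having ‘requires_grad’ set to ‘False’ is correct. The loss still gets a grad_fn as long as the model’s parameters require gradients, so look at the model or the loss computation rather than the input.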
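
As a sanity check, the sketch below feeds an input that does not require gradients through a small nn.Linear model (chosen only for illustration) and still backpropagates successfully:

```python
import torch
from torch import nn

model = nn.Linear(3, 1)
inputs = torch.tensor([[1.0, 2.0, 3.0]])  # requires_grad stays False
target = torch.tensor([[1.0]])

loss = nn.functional.mse_loss(model(inputs), target)
loss.backward()

print(inputs.requires_grad)      # False
print(loss.grad_fn is not None)  # True
print(model.weight.grad.shape)   # torch.Size([1, 3])
```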

Update the Model

If you’re working with a deep learning model, verify that your model’s architecture and data flow are correctly set up. Common causes include computing the loss inside a torch.no_grad() block, freezing every parameter of the model, or calling backward() on a detached copy of the loss.
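
Two model-level setups that commonly produce a loss with no graph behind it are sketched below, using a small nn.Linear model as a stand-in:

```python
import torch
from torch import nn

model = nn.Linear(3, 1)
inputs = torch.ones(2, 3)

# Case 1: the forward pass runs with gradient tracking switched off
with torch.no_grad():
    loss_no_grad = model(inputs).sum()

# Case 2: every parameter of the model has been frozen
for param in model.parameters():
    param.requires_grad_(False)
loss_frozen = model(inputs).sum()

print(loss_no_grad.requires_grad)  # False
print(loss_frozen.requires_grad)   # False
```

Calling backward() on either loss raises the error, even though the forward pass itself looks normal.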

Let’s illustrate this with a practical example. Suppose your code contains a non-trainable tensor named ‘fixed_tensor’:

Syntax:

import torch

# Create a fixed tensor

fixed_tensor = torch.tensor([1.0, 2.0, 3.0], requires_grad=False)

# This multiplication succeeds, but autograd does not record it

result = fixed_tensor * 2

# Calling backward() on the untracked result raises the RuntimeError

result.sum().backward()

In this case, you’ll encounter the “RuntimeError” as soon as backward() is called, since ‘fixed_tensor’ doesn’t require gradients and ‘result’ therefore has no grad_fn. To fix this, either set ‘requires_grad’ to ‘True’ if you want the tensor to participate in backpropagation, or reconsider whether it should be part of the gradient computation at all.
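
If ‘fixed_tensor’ should stay fixed, the gradient has to come from some other tensor that does require gradients. A minimal sketch, assuming a hypothetical trainable tensor named ‘weight’ that is not part of the original example:

```python
import torch

fixed_tensor = torch.tensor([1.0, 2.0, 3.0], requires_grad=False)
weight = torch.tensor([0.5, 0.5, 0.5], requires_grad=True)  # hypothetical trainable tensor

result = (fixed_tensor * 2 * weight).sum()
result.backward()

print(weight.grad)        # tensor([2., 4., 6.])
print(fixed_tensor.grad)  # None
```

The fixed tensor takes part in the computation as a constant, and only ‘weight’ receives a gradient.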

FAQs

What if I want to exclude a specific tensor from gradient computation in my model?

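If you intentionally want a tensor excluded from gradient computation, you can set its ‘requires_grad’ attribute to ‘False’, or call detach() on an intermediate result. Such a tensor can still be combined with tensors that require gradients; it is treated as a constant and receives no gradient. Just make sure the loss does not depend only on excluded tensors.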
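
For an intermediate result, detach() is the usual way to do this. In the sketch below, the names ‘w’ and ‘scale’ are illustrative:

```python
import torch

w = torch.tensor([1.0, 2.0], requires_grad=True)

scale = (w * 3).detach()  # same values, cut off from the graph
loss = (w * scale).sum()
loss.backward()

print(scale.requires_grad)  # False
print(w.grad)               # tensor([3., 6.])
```

The gradient treats ‘scale’ as a constant, so it equals the values of ‘scale’ rather than including a contribution through it.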

Can I selectively enable or disable gradient computation for specific tensors during training?

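Yes. You can change the ‘requires_grad’ attribute of leaf tensors, such as model parameters, at different stages of your training loop, for example to freeze a layer early on and unfreeze it later. Intermediate results are not leaf tensors, so for those use detach() or torch.no_grad() instead.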
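
A sketch of switching a layer off and back on between training stages, assuming a small nn.Sequential model invented for this example:

```python
import torch
from torch import nn

model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 1))

# Stage 1: freeze the first layer's weight and bias
for param in model[0].parameters():
    param.requires_grad_(False)
trainable_stage1 = sum(p.requires_grad for p in model.parameters())

# Stage 2: unfreeze it again
for param in model[0].parameters():
    param.requires_grad_(True)
trainable_stage2 = sum(p.requires_grad for p in model.parameters())

print(trainable_stage1, trainable_stage2)  # 2 4
```

As long as at least one parameter remains trainable in each stage, the loss keeps its grad_fn and backward() works.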

Conclusion

The “RuntimeError: element 0 of tensors does not require grad and does not have a grad_fn” error can be puzzling when you first encounter it, but it is simply autograd telling you that the tensor you called backward() on is not connected to anything that requires gradients. Understanding the causes and how to fix them is essential for successful deep learning model development. Remember to configure your tensors correctly, set ‘requires_grad’ as needed, and ensure that your model’s data flow aligns with the desired gradient computation. With these steps, you’ll be well on your way to resolving this error and building better deep learning models.
